Welcome to the second post in our Pi-IoT series.


In our previous post, we created a Kubernetes cluster using Raspberry Pis. Let's test that cluster now.

Deploying a Test Service

Log into the master node and deploy a test application:

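```shell
# Point kubectl at the cluster's admin credentials
export KUBECONFIG=$HOME/admin.conf
# Run three replicas of a small BusyBox httpd image built for the Raspberry Pi
kubectl run hypriot --image=hypriot/rpi-busybox-httpd --replicas=3 --port=80
```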

This creates a deployment called hypriot that runs three pods from the hypriot/rpi-busybox-httpd image. Next, let's expose the pods as a service:

```shell
kubectl expose deployment hypriot --port 80
```

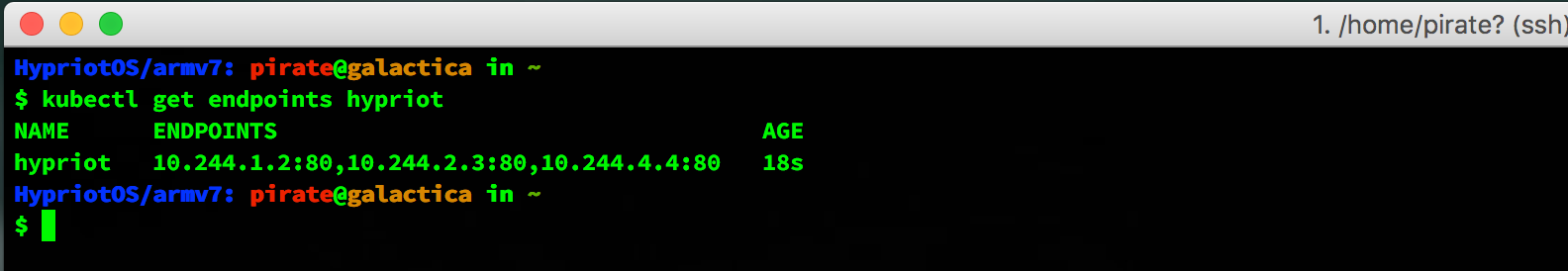

You can check the status with:

```shell
kubectl get endpoints hypriot
```

The output should look something like:

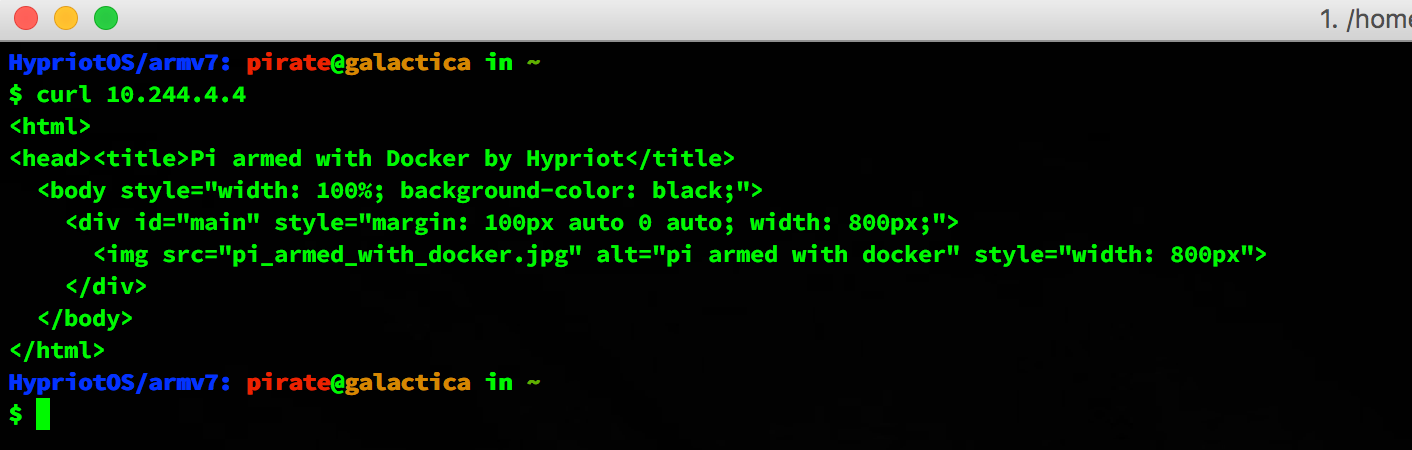
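If you would rather script this check than read the table, the sketch below counts the pod IPs registered behind the service and compares them with the three replicas requested earlier. The function name `check_endpoints` and its default arguments are hypothetical; it assumes kubectl is already configured through `KUBECONFIG`.

```shell
# Hypothetical helper: verify that a service has the expected number of
# ready endpoints. Assumes kubectl is configured (KUBECONFIG is set).
check_endpoints() {
  local svc="${1:-hypriot}"
  local expected="${2:-3}"
  local ips count
  # The jsonpath expression prints every ready pod IP, separated by spaces
  ips=$(kubectl get endpoints "$svc" -o jsonpath='{.subsets[*].addresses[*].ip}') || return 1
  # wc -w counts the IPs; tr strips the padding some wc versions add
  count=$(echo "$ips" | wc -w | tr -d ' ')
  if [ "$count" -eq "$expected" ]; then
    echo "$svc: $count/$expected endpoints ready"
  else
    echo "$svc: only $count/$expected endpoints ready" >&2
    return 1
  fi
}
```

Usage: `check_endpoints hypriot 3` prints a one-line summary and exits non-zero if fewer pods are registered than expected.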

You can curl the service to verify that it is running.
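For example, from any cluster node you can look up the ClusterIP that `kubectl expose` assigned and fetch the page from it. This is a minimal sketch that assumes kube-proxy makes ClusterIPs reachable from the nodes (the usual setup) and that curl is installed; the function name `curl_service` is hypothetical.

```shell
# Hypothetical helper: fetch a service's page through its ClusterIP.
# Assumes it runs on a cluster node with kubectl configured (KUBECONFIG).
curl_service() {
  local svc="${1:-hypriot}"
  local port="${2:-80}"
  local ip
  # Print only the ClusterIP field of the service
  ip=$(kubectl get service "$svc" -o jsonpath='{.spec.clusterIP}') || return 1
  if [ -z "$ip" ]; then
    echo "service $svc has no ClusterIP" >&2
    return 1
  fi
  # -f: exit non-zero on HTTP errors, -s: hide the progress meter
  curl -fs "http://${ip}:${port}/"
}
```

Running `curl_service hypriot` should print the HTML of the page served by the BusyBox httpd pods.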

Access the Service from Outside the Cluster

Let's deploy a sample Ingress Controller to manage incoming requests to our service. We will use Traefik as the load balancer. First, set up the RBAC roles, then deploy the example Traefik controller:

```shell
# RBAC: let Traefik read pods, services, endpoints and ingresses cluster-wide
cat > traefik-with-rbac.yaml <<EOF
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups:
      - ""
    resources:
      - pods
      - services
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
- kind: ServiceAccount
  name: traefik-ingress-controller
  namespace: kube-system
EOF

# Traefik controller Deployment, pinned by nodeSelector to the labeled node
cat > traefik-k8s-rbac-example.yaml <<EOF
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress-controller
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: traefik-ingress-controller
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress-controller
      annotations:
        scheduler.alpha.kubernetes.io/tolerations: |
          [
            {
              "key": "dedicated",
              "operator": "Equal",
              "value": "master",
              "effect": "NoSchedule"
            }
          ]
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      hostNetwork: true
      nodeSelector:
        nginx-controller: "traefik"
      containers:
      - image: hypriot/rpi-traefik
        name: traefik-ingress-controller
        resources:
          limits:
            cpu: 200m
            memory: 30Mi
          requests:
            cpu: 100m
            memory: 20Mi
        ports:
        - name: http
          containerPort: 80
          hostPort: 80
        - name: admin
          containerPort: 8888
        args:
        - --web
        - --web.address=localhost:8888
        - --kubernetes
EOF

kubectl apply -f traefik-with-rbac.yaml
kubectl apply -f traefik-k8s-rbac-example.yaml
```

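Next, label the node you want to act as the load balancer for the Traefik ingress controller. The Deployment's `nodeSelector` only schedules Traefik onto a node carrying the label `nginx-controller=traefik`.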

NOTE: If you want to use the master node as the load balancer, you will first need to remove its “NoSchedule” taint. For example, with a master node named galactica:

```shell
kubectl taint nodes galactica node-role.kubernetes.io/master:NoSchedule-
```

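In either case, label the node you chose (replace `<node-name>` with a name listed by `kubectl get nodes`):

```shell
kubectl label node <node-name> nginx-controller=traefik
```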
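To confirm the label took effect, you can list the labeled nodes and see where the Traefik pod was scheduled. The label and selector values come from the manifests above; the function name `show_traefik_placement` is hypothetical, and it assumes kubectl is configured.

```shell
# Hypothetical helper: show which node(s) carry the load-balancer label and
# which node the Traefik pod landed on.
show_traefik_placement() {
  echo "Labeled nodes:"
  # -l filters by label; -o name prints entries like node/<name>
  kubectl get nodes -l nginx-controller=traefik -o name || return 1
  echo "Traefik pod node:"
  # spec.nodeName stays empty while the pod is Pending (no labeled node yet)
  kubectl -n kube-system get pods -l k8s-app=traefik-ingress-controller \
    -o jsonpath='{.items[*].spec.nodeName}' || return 1
  echo
}
```

If the last line is empty, no node matches the `nodeSelector` yet and the Traefik pod is still waiting to be scheduled.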

Finally, create an Ingress object that tells Traefik to route requests on port 80 to the hypriot service:

```shell
cat > hypriot-ingress.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: hypriot
spec:
  rules:
  - http:
      paths:
      - path: /
        backend:
          serviceName: hypriot
          servicePort: 80
EOF
kubectl apply -f hypriot-ingress.yaml
```

Wait until all pods are in the Running state, which you can check with:

```shell
kubectl get pods
```

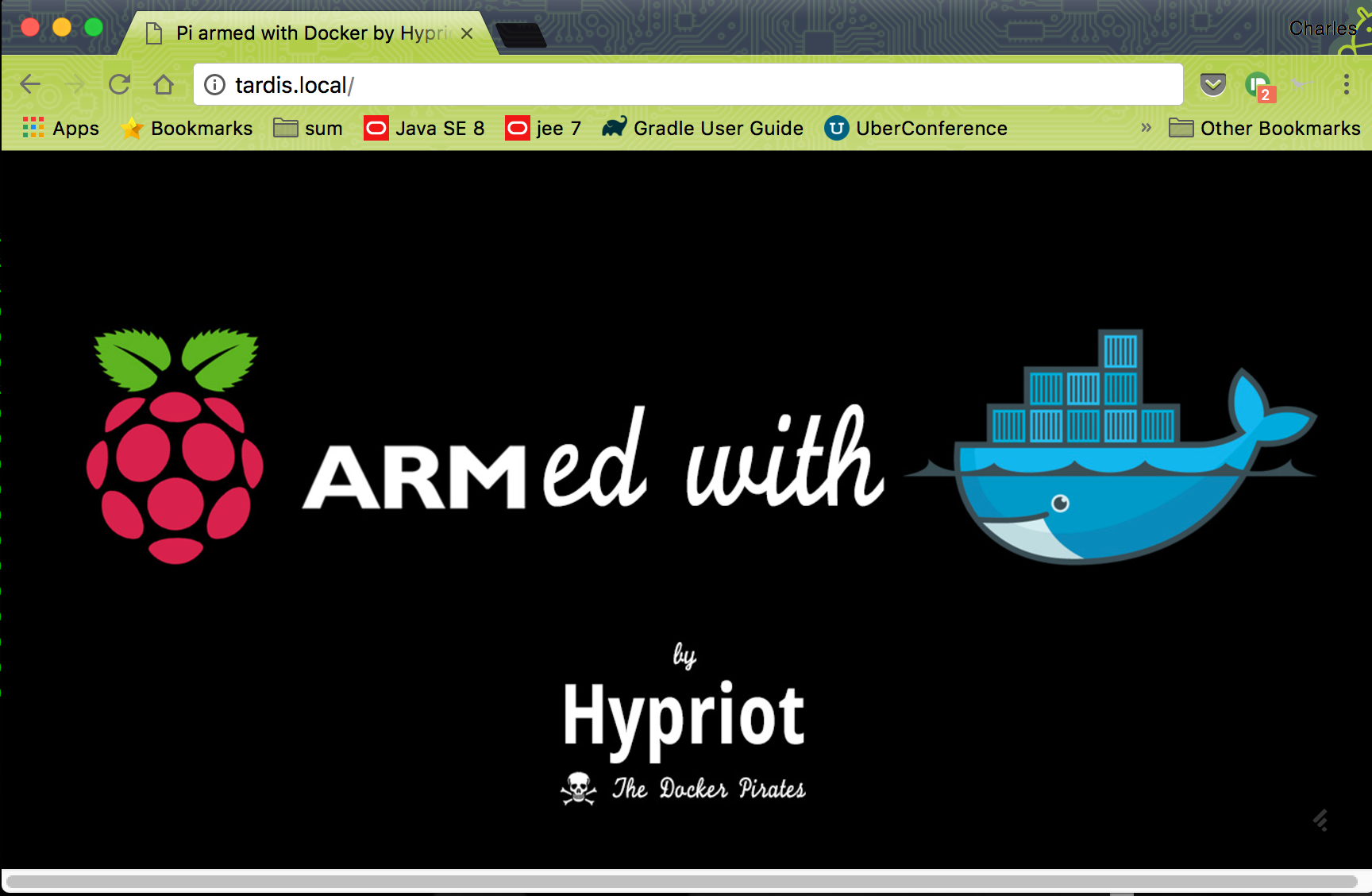
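Note that plain `kubectl get pods` only lists the default namespace, while the Traefik controller runs in kube-system. The sketch below polls pods in all namespaces until every one reports the Running phase. The function name, the 300-second default timeout and the 5-second poll interval are illustrative assumptions.

```shell
# Hypothetical helper: poll until every pod in every namespace reports the
# Running phase, or give up after a timeout. Assumes kubectl is configured.
wait_for_running() {
  local timeout="${1:-300}"   # total seconds to wait
  local interval=5            # seconds between polls
  local waited=0 phases p all_running
  while [ "$waited" -lt "$timeout" ]; do
    # One phase per pod; --all-namespaces includes kube-system (Traefik)
    phases=$(kubectl get pods --all-namespaces -o jsonpath='{.items[*].status.phase}') || return 1
    all_running=yes
    # Unquoted on purpose: split the space-separated phases into words
    for p in $phases; do
      [ "$p" = "Running" ] || all_running=no
    done
    if [ -n "$phases" ] && [ "$all_running" = yes ]; then
      echo "all pods running"
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "timed out after ${timeout}s" >&2
  return 1
}
```

Pods from finished Jobs report `Succeeded` rather than `Running`, so on a cluster that has any, this sketch would time out unless you relax the check.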

Then open the load balancer node's address in a browser, and you should see the web page served by the hypriot pods.
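The same check works from the command line. Because the Traefik Deployment uses `hostNetwork: true` with `hostPort: 80`, the labeled node's own address answers on port 80, and the Ingress rule for `/` forwards the request to the hypriot service. This sketch assumes the node reports an `InternalIP` address reachable from where you run it; the function name `curl_via_traefik` is hypothetical.

```shell
# Hypothetical helper: request the page through Traefik on the labeled node.
curl_via_traefik() {
  local ip
  # InternalIP of the first node labeled nginx-controller=traefik
  ip=$(kubectl get nodes -l nginx-controller=traefik \
    -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}') || return 1
  if [ -z "$ip" ]; then
    echo "no node labeled nginx-controller=traefik" >&2
    return 1
  fi
  # -f: exit non-zero on HTTP errors, -s: hide the progress meter
  curl -fs "http://${ip}/"
}
```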
