Dissatisfied with the last post, I still really wanted a working k8s Raspberry Pi cluster. At that point, I turned my attention to another networking plugin called Weave. If you are following along in the series, we are going back to the beginning and rebuilding the cluster.

I had tried Weave in the past with no luck but decided to give it another try. My initial install confirmed what I had found before: it just wasn’t working properly. This time, though, I wasn’t ready to give up. After a bit of research, I discovered a couple of problems:

- A bug in the Hypriot install caused all my nodes to have the same machine ID (see issue here)

- Additionally, there was an issue using the hostPort attribute for exposing services. At this point, it was requiring me to use hostNetwork: true in order to expose ports to the outside world. Fortunately, a workaround is available here

With these workarounds documented, let’s set up our RPi cluster.

Flash HypriotOS on your SD cards

You can find the latest release at HypriotOS. They also provide a pretty handy flash tool which you can use like:

    flash --hostname pecan-pi https://github.com/hypriot/image-builder-rpi/releases/download/v1.5.0/hypriotos-rpi-v1.5.0.img.zip

The --hostname value (pecan-pi above) is the name given to the Pi. You can also pass other parameters: if your network uses WiFi, add the credentials with -s YOURSSID -p YourNetworkPassword.

After flashing the OS to the SD cards, install them in your Pis, boot them up and log in via SSH:

    ssh pirate@pecan-pi.local

The default password is hypriot.

Updating the Machine Id

Now we will apply our first workaround by regenerating /etc/machine-id so that every node gets its own ID.

On each node:

    sudo su -

then

    dbus-uuidgen > /etc/machine-id
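To confirm the workaround took effect, it can help to compare the IDs across nodes once they have all been regenerated. The following is only a minimal sketch: the helper name duplicate_machine_ids and the extra hostnames in the usage comment are hypothetical, and it assumes you can SSH to each node as the pirate user.

```shell
#!/bin/sh
# Read machine IDs on stdin (one per line) and print each ID that
# occurs more than once. Empty output means every ID is unique.
duplicate_machine_ids() {
    sort | uniq -d
}

# Hypothetical usage from your workstation, assuming three nodes
# named pecan-pi, apple-pi and cherry-pi (only pecan-pi is from
# this post; the other names are placeholders):
#
#   for h in pecan-pi apple-pi cherry-pi; do
#       ssh "pirate@$h.local" cat /etc/machine-id
#   done | duplicate_machine_ids
```

If the loop prints anything, at least two nodes still share an ID and the dbus-uuidgen step should be repeated on one of them.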

Install Kubernetes

To install Kubernetes, we will add the official Kubernetes APT repository on each node. This requires root privileges. Using sudo, you can switch to the root user like this:

    sudo su -

then

    curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -
    echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" > /etc/apt/sources.list.d/kubernetes.list

Then install kubeadm on every node:

    apt-get update && apt-get install -y kubeadm

Initialize Kubernetes on the master node

    kubeadm init

- If you are using the WiFi network instead of Ethernet, you need to add --apiserver-advertise-address 192.168.1.223, where 192.168.1.223 is replaced by the actual IP address of the master Pi on your network. You can find this by running:

      ifconfig
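If you would rather script the lookup than read the address off the screen, the sketch below pulls one interface's IPv4 address out of the one-line-per-address output of ip. It is an illustration under assumptions: the helper name ipv4_of is made up, the ip tool (iproute2) is assumed to be installed on the Pi, and wlan0 is only a guess at your WiFi interface name.

```shell
#!/bin/sh
# Read `ip -o -4 addr show` output on stdin and print the IPv4 address
# (without the /prefix length) of the interface named in $1.
# In that output, field 2 is the interface, field 3 is "inet" and
# field 4 is ADDRESS/PREFIX.
ipv4_of() {
    awk -v ifc="$1" '$2 == ifc && $3 == "inet" {
        split($4, parts, "/")
        print parts[1]
        exit
    }'
}

# Usage on the master Pi (wlan0 is an assumed interface name):
#
#   ip -o -4 addr show | ipv4_of wlan0
#
# The result can then be passed along, for example:
#
#   kubeadm init --apiserver-advertise-address "$(ip -o -4 addr show | ipv4_of wlan0)"
```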

On the master node, exit the root shell and run the commands below so that you can use the cluster as a regular user:

    sudo cp /etc/kubernetes/admin.conf $HOME/
    sudo chown $(id -u):$(id -g) $HOME/admin.conf
    export KUBECONFIG=$HOME/admin.conf

Set up Weave as the pod network driver

Once Kubernetes has initialized (and this can take a long time), it’s time to set up Weave as the pod network driver.

On the master node, run:

    kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

Joining the Cluster

On every other node, run the kubeadm join command printed in the kubeadm init output to join the cluster. (Note: the master node’s hostname can be used instead of its IP.) It will look something like:

    kubeadm join --token=bb14ca.e8bbbedf40c58788 192.168.0.34

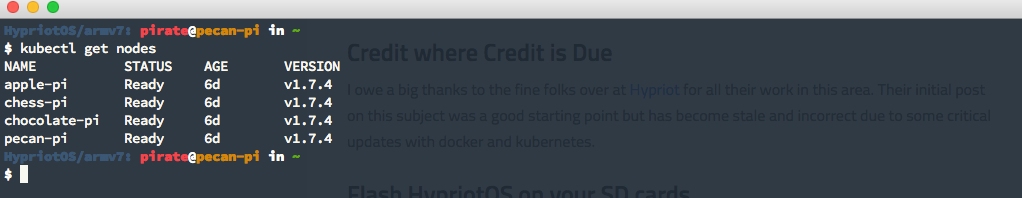

After a few seconds, you should see all nodes in your cluster when executing the following on the master node:

    kubectl get nodes

Your terminal should look similar to this:

Fixing the hostPort Problem

Now let’s fix the issue with using hostPort. On each node:

    cd /tmp && mkdir cni-plugins && cd cni-plugins && \
    wget https://github.com/containernetworking/plugins/releases/download/v0.6.0/cni-plugins-arm-v0.6.0.tgz && \
    tar zxfv cni-plugins-arm-v0.6.0.tgz

    sudo cp /tmp/cni-plugins/portmap /opt/cni/bin/

    sudo sh -c 'cat >/etc/cni/net.d/10-mynet.conflist <<-EOF
    {
        "cniVersion": "0.3.0",
        "name": "mynet",
        "plugins": [
            {
                "name": "weave",
                "type": "weave-net",
                "hairpinMode": true
            },
            {
                "type": "portmap",
                "capabilities": {"portMappings": true},
                "snat": true
            }
        ]
    }
    EOF'
    sudo rm /etc/cni/net.d/10-weave.conf
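Before restarting the pods, it may be worth checking on each node that the new config list really chains the portmap plugin and that the binary landed where the config expects it. This is a rough sketch, not part of the original workaround: has_portmap is a hypothetical helper that only greps for the plugin type and does not validate the JSON.

```shell
#!/bin/sh
# Succeed if the CNI config list file given as $1 contains a plugin
# entry whose "type" is "portmap". This is a plain text match, not a
# JSON parse, so it will not catch syntax errors in the file.
has_portmap() {
    grep -Eq '"type"[[:space:]]*:[[:space:]]*"portmap"' "$1"
}

# Usage on a node, with the paths used in this post:
#
#   has_portmap /etc/cni/net.d/10-mynet.conflist && echo "portmap is chained"
#   test -x /opt/cni/bin/portmap && echo "portmap binary is installed"
```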

To apply the changes, on the master node, run:

    kubectl delete po --all --namespace=kube-system

Once

    kubectl get po --all-namespaces -o wide

shows everything in a Running state, you have a working Kubernetes RPi cluster.
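On a Pi the pods can take a while to settle, so instead of rereading the full listing you can filter it down to the pods that are not there yet. The sketch below assumes the default column order of kubectl get po --all-namespaces (NAMESPACE, NAME, READY, STATUS, ...); the helper name pods_not_running is made up for illustration.

```shell
#!/bin/sh
# Read `kubectl get po --all-namespaces --no-headers` output on stdin
# and print "namespace/name" for every pod whose STATUS column
# (field 4) is anything other than "Running".
pods_not_running() {
    awk '$4 != "Running" { print $1 "/" $2 }'
}

# Usage on the master node:
#
#   kubectl get po --all-namespaces --no-headers | pods_not_running
#
# Empty output means every pod reports Running.
```

Note that pods which finished normally (status Completed) would also be listed, so treat the output as a prompt to look closer rather than as an error report.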

Using Hypriot 1.9.0 and latest kubernetes.

Following your steps I’m getting these errors on master (node1) when worker joins the cluster

    Message from syslogd@node1 at Jun 24 21:44:54 …
    kernel:[12801.482619] Internal error: Oops: 80000007 [#1] SMP ARM
    Message from syslogd@node1 at Jun 24 21:44:54 …
    kernel:[12801.561165] Process weaver (pid: 5477, stack limit = 0x8916e210)
    Message from syslogd@node1 at Jun 24 21:44:54 …
    kernel:[12801.573901] Stack: (0x8916f9f0 to 0x89170000)
    Message from syslogd@node1 at Jun 24 21:44:54 …
    kernel:[12801.586353] f9e0: 00000000 00000000 0d08080a 8916fa90
    Message from syslogd@node1 at Jun 24 21:44:54 …
    kernel:[12801.610404] fa00: 0000801a 00003cd4 9c95b090 9c95b058 8916fa44 8916fa20 80c7b140 9685f580

Seems like some problem somewhere 🙂

I haven’t tried to run that version of Hypriot with the latest k8s and docker. We will be looking to do some updates and workarounds soon.

Funny though, flannel just works nice.